Advancing High-Performance Storage: DapuStor Haishen5 H5100 E3.S SSD

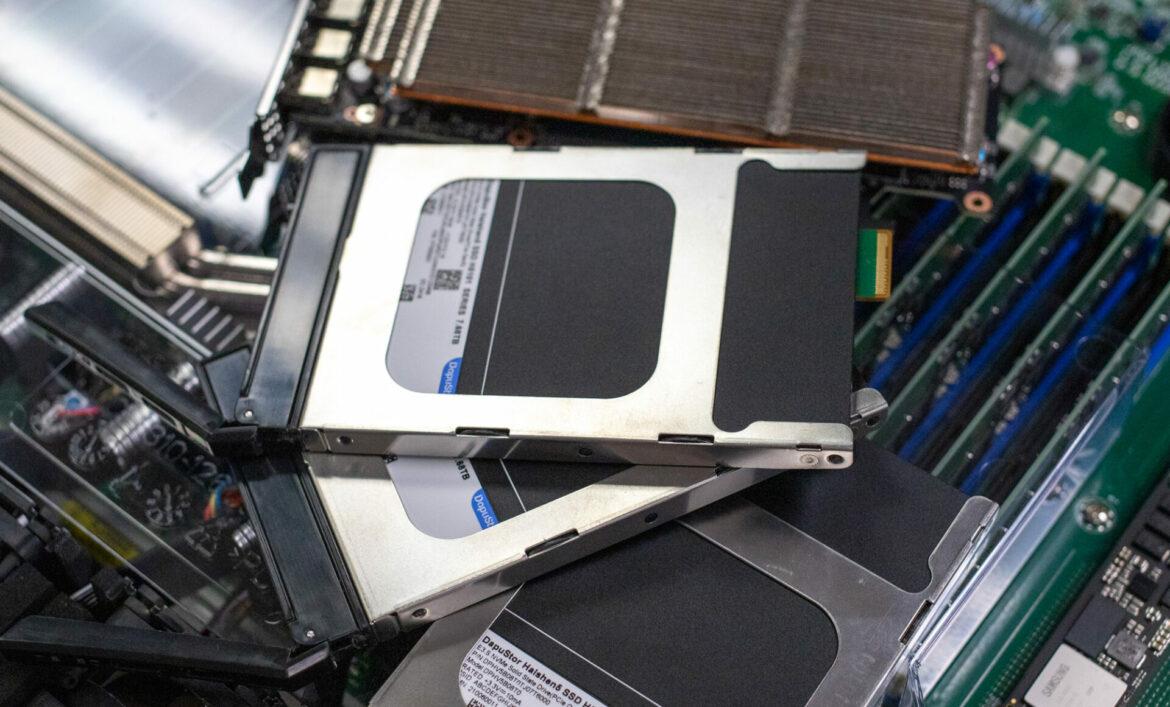

Data is more valuable than ever, so the need for high-performance, reliable, and energy-efficient storage solutions is critical. The DapuStor Haishen5 H5100 E3.S SSD addresses these needs with advanced technology and thoughtful engineering. We put 16 of the H5100s to work to see how fast and capable these modern Gen5 SSDs are.

DapuStor Haishen5 H5100 E3.S SSD

The H5100 represents a significant leap forward in storage performance, building upon the foundation of DapuStor’s Gen4 SSDs. Leveraging the latest Marvell Bravera PCIe Gen5 enterprise controller, KIOXIA BiCS8 3D TLC NAND, and bespoke DapuStor firmware, this drive offers double the throughput compared to its Gen4 counterparts. With sequential read speeds of up to 14,000 MB/s and write speeds of up to 9,500 MB/s, the Haishen5 H5100 dramatically reduces data access times and latency, critical for modern workloads like those in AI and HPC.
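To put the 14,000 MB/s figure in context, here is a minimal sketch of the theoretical ceiling of a PCIe x4 link. It assumes the standard per-lane signaling rates (32 GT/s for PCIe 5.0, 16 GT/s for PCIe 4.0) and 128b/130b encoding, and it ignores packet and protocol overhead, so real drives will land somewhat below these numbers. The function and variable names are illustrative only.

```python
# Rough one-direction ceiling for a PCIe x4 link, before packet/protocol overhead.
ENCODING = 128 / 130  # 128b/130b line encoding used by PCIe 3.0 and later


def link_ceiling_gb_s(gt_per_s: float, lanes: int = 4) -> float:
    """Return the usable line rate in decimal GB/s for one direction."""
    return gt_per_s * ENCODING * lanes / 8  # 8 bits per byte


gen5_ceiling = link_ceiling_gb_s(32.0)  # PCIe 5.0: 32 GT/s per lane
gen4_ceiling = link_ceiling_gb_s(16.0)  # PCIe 4.0: 16 GT/s per lane
rated_read = 14.0  # GB/s, from the quoted 14,000 MB/s sequential read

print(f"Gen5 x4 ceiling: {gen5_ceiling:.2f} GB/s")
print(f"Gen4 x4 ceiling: {gen4_ceiling:.2f} GB/s")
print(f"Rated read uses {rated_read / gen5_ceiling:.0%} of the Gen5 link")
```

Under these assumptions, the rated sequential read sits close to what the Gen5 x4 interface can carry, and the Gen5 link offers twice the headroom of a Gen4 link.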

Random read and write performance reaches up to 2.8 million and 380 thousand IOPS, respectively. Typical 4K sequential latencies are 7 microseconds for reads and 8 microseconds for writes, while typical 4K random read latency is in the 54 to 57 microsecond range. These performance enhancements translate to faster data processing, improved system responsiveness, and the ability to handle more intensive workloads, making the drive a strong choice for applications that demand high-speed data transfer and storage efficiency.

Modern data centers and hyperscalers face more than just performance challenges. Energy efficiency is increasingly critical. The KIOXIA BiCS8 3D NAND and Marvell Bravera SC5 controller combine to deliver high capacity and power efficiency. The BiCS8’s vertical stacking technology enables capacities of up to 32TB with reduced power consumption. Meanwhile, the Bravera SC5’s dynamic power management and efficient data processing keep performance high and energy use low, making the drive well suited to demanding enterprise applications.

Flexibility in design is critical, too. As new servers migrate from U.2 to E3.S, and with hyperscalers and even NVIDIA finding several applications for E1.S, SSD vendors need to support a wider variety of form factors. With the H5100, DapuStor continues to support the legacy U.2 form factor in Gen5. It also offers 3.84TB and 7.68TB capacities in the E3.S and E1.S EDSFF form factors, which bring several density and efficiency benefits over U.2 drives.

Another interesting aspect of the DapuStor H5100 is the firmware design. Controlling its own firmware makes it easier for DapuStor to tune how all of the drive’s components interact. This benefit manifests in many ways, ranging from tighter QoS to support for advanced features like Flexible Data Placement (FDP). DapuStor will also customize a drive’s firmware for specific use cases should a customer have requirements outside the standard framework. Customizable areas include firmware adjustments, security settings, performance tuning, and power management configurations.

The H5100 SSD incorporates advanced Quality of Service (QoS) features to ensure consistent performance and data integrity across various workloads. These QoS features enable the drive to manage and prioritize I/O operations effectively, maintaining low latency and high throughput even under demanding conditions.

FDP technology in the DapuStor H5100 optimizes data management within the drive. By allowing data to be written into different physical spaces, FDP improves performance, endurance, and overall storage efficiency. This feature helps reduce write amplification and enhances the drive’s ability to handle mixed workloads effectively. While it is seen only in the hyperscaler world right now, FDP is gaining significant momentum within OCP, and thanks to the inherent endurance benefits it provides, it won’t be long before more mainstream applications take advantage.

DapuStor Haishen5 H5100 SSD Specifications

| Specification | 3.84TB (E3.S) | 7.68TB (E3.S) | 3.84TB (U.2 15mm) | 7.68TB (U.2 15mm) | 15.36TB (U.2 15mm) | 30.72TB (U.2 15mm) | 3.84TB (E1.S) | 7.68TB (E1.S) |
|---|---|---|---|---|---|---|---|---|
| Interface | PCIe 5.0 x4, NVMe 2.0 | | | | | | | |
| Read Bandwidth (128KB) MB/s | 14000 | 14000 | 14000 | 14000 | 14000 | 14000 | 14000 | 14000 |
| Write Bandwidth (128KB) MB/s | 6300 | 8800 | 9500 | 9500 | 4800 | 5000 | 6300 | 8800 |
| Random Read (4KB) KIOPS | 2800 | 2800 | 2800 | 2800 | 2800 | 2800 | 2800 | 2800 |
| Random Write (4KB) KIOPS | 300 | 380 | 300 | 380 | 380 | 380 | 200 | 200 |
| 4K Random Latency (Typ.) R/W µs | 57/8 | 54/8 | 56/8 | 54/8 | 54/8 | 54/8 | 57/8 | 54/8 |
| 4K Sequential Latency (Typ.) R/W µs | Read Latency 7 µs/Write Latency 8 µs | | | | | | | |
| Typical Power (W) | 18 | 18 | 18 | 19 | 19 | 19 | 17.5 | 17.5 |
| Idle Power (W) | 7 | 7 | 7 | 5 | 5 | 5 | 7 | 7 |
| Flash Type | 3D eTLC NAND Flash | | | | | | | |
| Endurance | 1 | | | | | | | |
| MTBF | 2.5 million hours | | | | | | | |
| UBER | 1 sector per 10^17 bits read | | | | | | | |
| Warranty | 5 years | | | | | | | |

Performance Results

To better understand how well the DapuStor Haishen5 H5100 E3.S SSDs perform, we tested 16 7.68TB drives in a Supermicro storage server. The Supermicro Storage A+ ASG-1115S-NE316R is a high-performance 1U rackmount server for data-intensive applications. It supports 16 hot-swap E3.S NVMe drives, making it an ideal testbed for these SSDs. This server is powered by a single AMD EPYC 9634 84-core CPU and 384GB of DDR5 ECC memory.

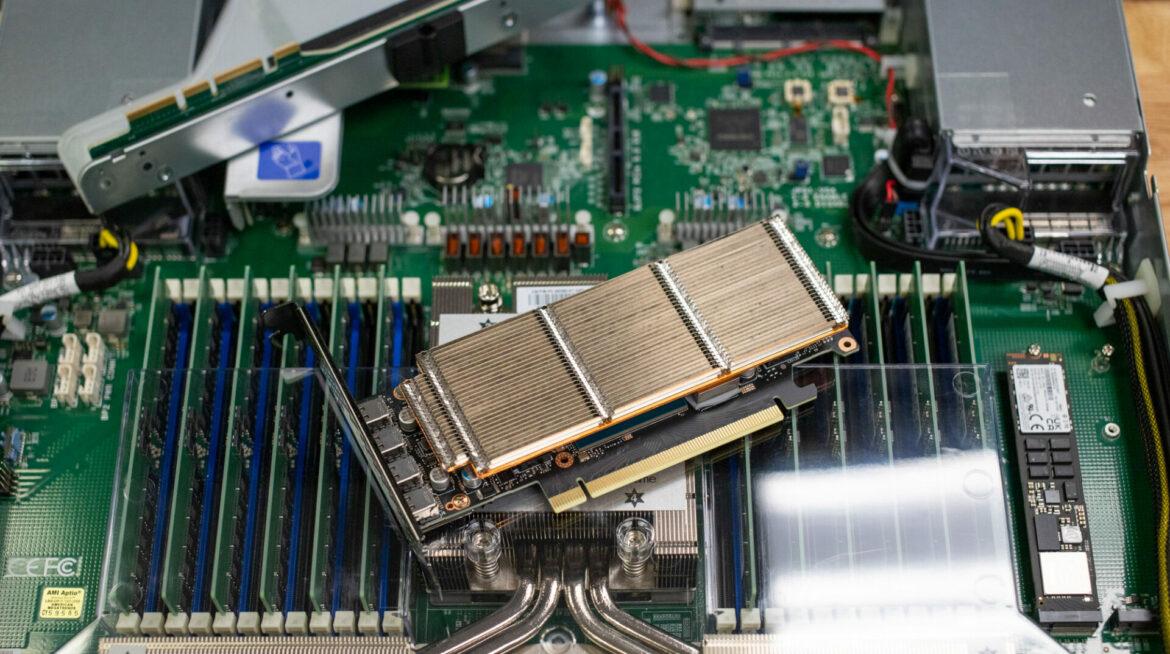

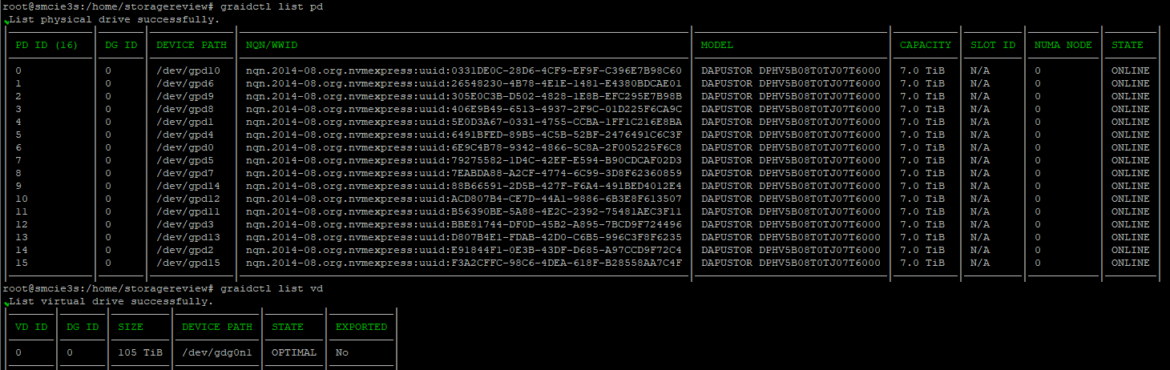

We used the Graid solution to aggregate the DapuStor SSDs. Graid offloads write parity calculation work to a GPU, freeing up system resources for other processes. For PCIe Gen4/5 platforms, Graid currently uses the NVIDIA A2000 GPU. On most platforms, the stock double-width card with an active air cooler will suffice. This Supermicro 1U platform has only two single-width slots, however, and Graid has a solution for that case: a modified version of the NVIDIA A2000 with a thin passive cooler, which allows its use in server platforms that have the airflow but not the space for a thicker GPU.

With Graid in place, we aggregated the storage into a large RAID5 pool, combining 16 of the 7.68TB DapuStor Haishen5 H5100 E3.S SSDs to create a 105TB volume. Graid’s default stripe size for volumes is 4KB. While JBOD flash can provide higher performance, there’s a risk of total data loss if any SSD fails. A RAID solution protects against that drive loss event and is a better choice for this testing scenario.
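The volume size follows from simple RAID5 arithmetic: one drive’s worth of capacity goes to parity. The sketch below assumes the 105TB figure is reported in binary units (TiB) while the drive capacity is in decimal TB; that is an interpretation rather than something the test notes state, and it ignores any metadata or reserved space.

```python
def raid5_usable_tb(drives: int, drive_tb: float) -> float:
    """Usable decimal TB in a RAID5 group: one drive's capacity holds parity."""
    return (drives - 1) * drive_tb


usable_tb = raid5_usable_tb(16, 7.68)
# Convert decimal terabytes to binary tebibytes (assumed reporting unit).
usable_tib = usable_tb * 1e12 / 2**40

print(f"Usable capacity: {usable_tb:.1f} TB, or about {usable_tib:.1f} TiB")
```

With 16 drives the parity overhead is only 1/16 of raw capacity, which is part of why RAID5 is attractive for a group this wide.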

With 16 DapuStor Haishen5 H5100 PCIe Gen5 SSDs in a large HW RAID5 Graid group, we start with peak bandwidth and peak I/O tests. Parity protection is crucial to prevent data loss in case of drive failure, but it must not introduce so much overhead that it limits system performance. How well a RAID solution balances the two is an important consideration for customers.

Looking at peak read bandwidth with a 1MB data transfer size, we measured an incredible 205GB/s from this RAID group. That works out to 12.8GB/s per drive for the 16-drive RAID5 group, close to the spec sheet figure of 14GB/s. In sequential write, we measured 105GB/s aggregate, or 6.6GB/s per SSD, against a spec sheet figure of 8.8GB/s for the 7.68TB E3.S drive.
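The per-drive figures can be reproduced with a short calculation. This is a minimal sketch that divides the aggregate numbers evenly across the 16 drives and compares them with the ratings listed for the 7.68TB E3.S model in the specification table; the dictionary and function names are illustrative.

```python
DRIVES = 16

# Aggregate results measured on the RAID5 group, in GB/s.
measured = {"read": 205.0, "write": 105.0}
# Per-drive ratings for the 7.68TB E3.S model from the specification table, in GB/s.
rated = {"read": 14.0, "write": 8.8}


def per_drive(aggregate_gb_s: float, drives: int = DRIVES) -> float:
    """Even share of the aggregate bandwidth attributed to each drive."""
    return aggregate_gb_s / drives


for op, aggregate in measured.items():
    share = per_drive(aggregate)
    print(f"{op}: {share:.1f} GB/s per drive, {share / rated[op]:.0%} of rated")
```

Note that this even split counts only host data. In RAID5 the drives also absorb parity writes, so the actual write load per SSD is somewhat higher than the host-side share suggests.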

To measure peak throughput, we look at 4K random transfers. 4K random read topped out at 18.1M IOPS and 74.3GB/s, while 4K random write reached 1.873M IOPS and 7.7GB/s.

| Peak Throughput and Bandwidth | DapuStor 7.68TB x 16 HW RAID Throughput | DapuStor 7.68TB x 16 HW RAID Bandwidth | DapuStor 7.68TB x 16 HW RAID Latency |
|---|---|---|---|
| 1MB sequential read (84T/16Q) | 129k IOPS | 205GB/s | 6.9ms |
| 1MB sequential write (84T/16Q) | 100k IOPS | 105GB/s | 13.4ms |
| 4K random read (84T/32Q) | 12.8M IOPS | 52.4GB/s | 0.21ms |
| 4K random read (84T/256Q) | 18.1M IOPS | 74.3GB/s | 1.184ms |
| 4K random write (84T/32Q) | 1.873M IOPS | 7.7GB/s | 0.717ms |
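The throughput, bandwidth, and latency columns are related: bandwidth is IOPS multiplied by block size, and average latency can be estimated with Little’s law as outstanding I/Os divided by IOPS. The sketch below applies both to the two 4K random read rows. It assumes that a label such as 84T/32Q means 84 threads each keeping 32 I/Os in flight, and that 4K means 4,096 bytes; both are interpretations, not details given in the table.

```python
def bandwidth_gb_s(iops: float, block_bytes: int) -> float:
    """Bandwidth in decimal GB/s implied by an IOPS figure and block size."""
    return iops * block_bytes / 1e9


def avg_latency_ms(iops: float, threads: int, queue_depth: int) -> float:
    """Little's law estimate: outstanding I/Os divided by completion rate."""
    outstanding = threads * queue_depth
    return outstanding / iops * 1e3


# (label, IOPS, threads, queue depth) for the two 4K random read rows.
rows = [
    ("4K random read 84T/32Q", 12.8e6, 84, 32),
    ("4K random read 84T/256Q", 18.1e6, 84, 256),
]

for label, iops, threads, qd in rows:
    bw = bandwidth_gb_s(iops, 4096)
    lat = avg_latency_ms(iops, threads, qd)
    print(f"{label}: ~{bw:.1f} GB/s, ~{lat:.2f} ms average latency")
```

The estimates line up with the table to within rounding of the reported IOPS, and they show the usual trade-off: raising the queue depth from 32 to 256 adds about 40% more IOPS at several times the average latency.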

While static read or write tests are important when measuring peak bandwidth or throughput, mixed I/O performance across a wide range of block sizes shows how storage performs in more traditional use cases.

We start with a 4K block size, with read percentages ranging from 70% to 90%. With a 70% read, 30% write random workload applied to the 16-SSD DapuStor Haishen5 H5100 RAID group, we measured a throughput of 4.173M IOPS and 17.1GB/s while maintaining an average latency of just 0.644ms. Increasing the read mixture to 80%, the throughput increased to 5.762M IOPS and 23.6GB/s. At a 90% read mixture, the performance continued to scale up to 7.36M IOPS and 30.1GB/s.

| Mixed 4K Random Throughput and Bandwidth | DapuStor 7.68TB x 16 HW RAID Throughput | DapuStor 7.68TB x 16 HW RAID Bandwidth | DapuStor 7.68TB x 16 HW RAID Latency |
|---|---|---|---|
| 4K Random 70/30 (84T/32Q) | 4.173M IOPS | 17.1GB/s | 0.644ms |
| 4K Random 80/20 (84T/32Q) | 5.762M IOPS | 23.6GB/s | 0.466ms |
| 4K Random 90/10 (84T/32Q) | 7.360M IOPS | 30.1GB/s | 0.365ms |
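For readers who want to try a similar mixed workload, the sketch below builds command lines for fio, a widely used open-source I/O benchmark. The review does not name the tool or the exact settings used, so treat everything here as an assumption: the choice of fio, the libaio engine, the 60-second runtime, the reading of 84T/32Q as 84 jobs at queue depth 32, and the hypothetical device path `/dev/example_raid_volume`.

```python
import shlex


def fio_mixed_args(
    read_pct: int,
    block_size: str = "4k",
    threads: int = 84,
    queue_depth: int = 32,
    device: str = "/dev/example_raid_volume",  # hypothetical path, replace before use
    runtime_s: int = 60,
) -> list[str]:
    """Build an fio argument list for a random mixed read/write test."""
    return [
        "fio",
        f"--name=rand_{block_size}_{read_pct}r",
        f"--filename={device}",
        "--ioengine=libaio",     # assumed engine; io_uring is another option
        "--direct=1",            # bypass the page cache
        "--rw=randrw",           # random mixed reads and writes
        f"--rwmixread={read_pct}",
        f"--bs={block_size}",
        f"--numjobs={threads}",
        f"--iodepth={queue_depth}",
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",     # aggregate results across all jobs
    ]


# Print one command per read mix. Nothing is executed here: running these
# against a real device overwrites its contents.
for pct in (70, 80, 90):
    print(shlex.join(fio_mixed_args(pct)))
```

The same helper covers larger block sizes by passing `block_size="8k"` or `block_size="16k"`.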

When increasing the block size to 8K, we get closer to the traditional database and OLTP workloads. Here, the 16 Gen5 SSD HW RAID group continued to impress us with its incredible performance. With a 70% read mixture, we measured 2.956M IOPS or 24.3GB/s. At an 80% read mixture, throughput increased to 4.024M IOPS, and bandwidth rose to 33GB/s. At the 90% read mix, we measured a strong 5.939M IOPS at 48.7GB/s, with an average latency of just 0.452ms.

| Mixed 8K Random Throughput and Bandwidth | DapuStor 7.68TB x 16 HW RAID Throughput | DapuStor 7.68TB x 16 HW RAID Bandwidth | DapuStor 7.68TB x 16 HW RAID Latency |
|---|---|---|---|
| 8K Random 70/30 (84T/32Q) | 2.956M IOPS | 24.3GB/s | 0.909ms |
| 8K Random 80/20 (84T/32Q) | 4.024M IOPS | 33GB/s | 0.668ms |
| 8K Random 90/10 (84T/32Q) | 5.939M IOPS | 48.7GB/s | 0.452ms |

The 16K block size delivered the highest bandwidth in our random workload testing. With the GPU-accelerated HW RAID pulling the 16 H5100 Gen5 SSDs together in RAID5, we could drive up the usable bandwidth of the platform. Starting with a 70% read mixture, we measured 1.938M IOPS and 31.7GB/s. At 80% read, that increased to 2.484M IOPS and 40.6GB/s, with average latency just over 1ms. At the 90% read peak, the storage array delivered 3.63M IOPS and 59.4GB/s of total bandwidth, an incredible figure considering this is random I/O hitting the array.

| Mixed 16K Random Throughput and Bandwidth | DapuStor 7.68TB x 16 HW RAID Throughput | DapuStor 7.68TB x 16 HW RAID Bandwidth | DapuStor 7.68TB x 16 HW RAID Latency |
|---|---|---|---|
| 16K Random 70/30 (84T/32Q) | 1.938M IOPS | 31.7GB/s | 1.386ms |
| 16K Random 80/20 (84T/32Q) | 2.484M IOPS | 40.6GB/s | 1.082ms |
| 16K Random 90/10 (84T/32Q) | 3.630M IOPS | 59.4GB/s | 0.740ms |

Conclusion

High-performance SSDs like the DapuStor Haishen5 H5100 are crucial for advanced applications. In artificial intelligence and machine learning, these SSDs accelerate data processing, enabling faster model training and real-time analytics. For big data analytics, they ensure quick data retrieval and analysis, supporting informed business decisions. In high-frequency trading, they provide the low latency and high-speed transactions required. Additionally, the Haishen5 H5100 E3.S delivers consistent and fast data access for virtualization and cloud computing, which is vital for maintaining efficient and reliable virtualized environments. Virtually every use case can benefit from the significant performance and efficiency gains that Gen5 SSDs offer.

In our testing, the H5100 SSDs provided incredible performance in our dense 1U server. The drive is a versatile solution for various high-performance applications, helping enterprises meet their evolving data storage needs. We focused on GPU-accelerated HW RAID performance with a Graid SupremeRAID setup. This allowed us to maintain the strong performance of 16 PCIe Gen5 SSDs in this server without sacrificing data protection, as a JBOD or RAID0 configuration would. Highlights included an incredible 205GB/s read and 105GB/s write sequential bandwidth with a 1MB transfer size. Random I/O performance was also strong, measuring an impressive 18.1M IOPS read and 1.9M IOPS write in a 4K transfer test.

What’s as exciting as the in-box performance is the potential for sharing data across the network. Although it’s early days, we are experimenting with this DapuStor setup and Broadcom 400GbE OCP NICs. With two of these NICs in the 1U box, we expect to achieve approximately 80GB/s of shared storage performance. For tasks like AI training or real-time data visualization, a fast network and fast storage are key to maximizing GPU utilization. We anticipate more developments with this impressive platform.
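As a rough sanity check on the 80GB/s expectation, the sketch below compares it with the raw line rate of two 400GbE links. It assumes one 400GbE port per NIC and ignores Ethernet and transport overhead, neither of which the text spells out.

```python
def nic_line_rate_gb_s(ports: int, gbit_per_port: float) -> float:
    """Raw line rate in decimal GB/s (8 bits per byte), before protocol overhead."""
    return ports * gbit_per_port / 8


line_rate = nic_line_rate_gb_s(ports=2, gbit_per_port=400)  # assumes 1 port per NIC
expected_shared = 80.0  # GB/s, the figure anticipated in the text
local_read = 205.0      # GB/s, peak 1MB sequential read measured in-box

print(f"Raw network line rate: {line_rate:.0f} GB/s")
print(f"Expected shared throughput: {expected_shared / line_rate:.0%} of line rate")
print(f"Share of local peak read: {expected_shared / local_read:.0%}")
```

Under these assumptions the network, not the storage, would be the limiting factor, with the array able to supply well over twice what two 400GbE links can carry.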
